On the evening of June 2, Nvidia CEO Jensen Huang (Huang Renxun) announced at Computex 2024 in Taiwan that Nvidia intends to outpace Moore's Law by updating its GPUs once a year, and he disclosed the roadmap for the next three generations of its data center semiconductor technology.

Huang also announced that the Blackwell chip, unveiled earlier this year, is now in production and that Blackwell Ultra will launch in 2025. The next-generation AI chip platform, named Rubin, will use HBM4 memory and is expected to launch in 2026.

The Rubin family will include new GPUs, CPUs, and networking processors. A new CPU named Vera will be used to enhance AI capabilities. The GPU, which is central to AI workloads, will use next-generation high-bandwidth memory from industry giants such as SK Hynix, Micron, and Samsung. Despite the excitement around the Rubin platform, Huang revealed only limited information about its specific functions and capabilities.

Huang also announced the availability of NVIDIA NIM, a set of inference microservices that can be deployed in the cloud, in a data center, or on a workstation. With NIM, developers can build generative AI applications such as copilots and chatbots in minutes rather than weeks. In addition, Nvidia introduced Project G-Assist, an AI assistant for the GeForce RTX AI PC platform; NVIDIA ACE (digital human technology) NIMs for digital humans; and RTX-accelerated APIs for small language models (SLMs) in the Microsoft Windows Copilot Runtime.

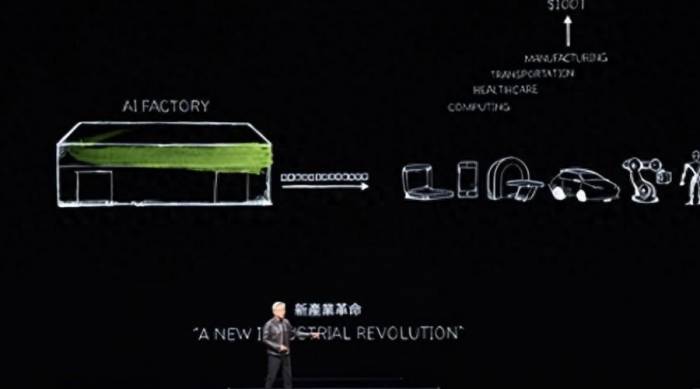

"Today, we are at the forefront of a major shift in the field of computing. The intersection of AI and accelerated computing will redefine the future," Huang said. He predicted that the global AI industry will eventually reach 100 trillion US dollars, more than 330 times the 300 billion US dollars of the previous IT era, and described the market outlook as strong. The AI-centered industrial revolution that Huang is championing has begun: from CUDA software to GPU hardware, Nvidia is "accelerating everything."

Consumer Innovation: Artificial Intelligence on Personal Computers and Gaming

Nvidia has been a pioneer in AI on personal computers since 2018, when it launched GeForce RTX, the first consumer graphics processor designed for AI. Its innovations include Deep Learning Super Sampling (DLSS), which significantly improves gaming performance by using AI to generate pixels and frames. Since then, Nvidia has expanded its AI applications to content creation, video conferencing, live broadcasting, streaming video consumption, and productivity.

Nvidia now holds a leading position in emerging generative AI use cases, with more than 500 AI applications and games accelerated by RTX GPUs. At Computex, Nvidia announced new GeForce RTX AI laptops from major manufacturers such as ASUS and MSI. These laptops will be equipped with advanced AI hardware and Copilot features, and will support AI-enabled applications and games.

These laptops use the latest Nvidia AI libraries and SDKs, optimized for AI inference, training, gaming, 3D rendering, and video processing. Compared with a Mac, they run Stable Diffusion 7 times faster and large language model inference 10 times faster, a significant leap in performance and efficiency.

Nvidia has also launched the RTX AI Toolkit to help developers customize, optimize, and deploy AI capabilities within Windows applications. It includes tools for fine-tuning pre-trained models, optimizing them for various hardware, and deploying them for local and cloud inference. According to Nvidia AI PC Product Director Jesse Clayton, the AI Inference Manager will let developers integrate hybrid AI capabilities into their applications, choosing between local and cloud inference based on system configuration.
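The local-versus-cloud decision Clayton describes can be pictured as a small routing function. The sketch below is purely illustrative: `SystemConfig`, `choose_backend`, and the memory figures are hypothetical names and numbers chosen for this example, not part of Nvidia's AI Inference Manager or any RTX AI Toolkit API.

```python
from dataclasses import dataclass


@dataclass
class SystemConfig:
    """Hypothetical description of the user's machine."""
    has_rtx_gpu: bool
    vram_gb: float
    online: bool


def choose_backend(config: SystemConfig, model_vram_gb: float) -> str:
    """Return 'local', 'cloud', or 'unavailable' for a model of the given size.

    Assumed policy: run locally when an RTX GPU has enough memory for the
    model, otherwise fall back to the cloud if a connection exists.
    """
    if config.has_rtx_gpu and config.vram_gb >= model_vram_gb:
        return "local"
    if config.online:
        return "cloud"
    return "unavailable"


laptop = SystemConfig(has_rtx_gpu=True, vram_gb=8.0, online=True)
print(choose_backend(laptop, model_vram_gb=6.0))   # small model fits on the GPU
print(choose_backend(laptop, model_vram_gb=40.0))  # too large, routed to the cloud
```

A real hybrid scheduler would likely weigh latency, privacy, and cost as well; the point here is only that the choice is driven by what the system can do.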

"We believe that artificial intelligence on personal computers is one of the most significant developments in the history of technology," said Clayton. "Artificial intelligence is being integrated into every major application, and it will impact almost every personal computer user."

In gaming, AI is widely used to render environments through DLSS and to enhance non-player characters (NPCs). Project G-Assist will help developers create AI assistants for games. G-Assist improves the player's experience by providing context-aware responses to in-game queries, tracking system performance during gameplay, and optimizing system settings.

Furthermore, Microsoft and Nvidia are collaborating to help developers build Windows native and web applications with AI capabilities. This collaboration, set to launch later this year as a developer preview, will provide API access to GPU-accelerated small language models (SLMs) and retrieval-augmented generation (RAG) capabilities that run on-device as part of the Windows Copilot Runtime. SLMs open the door for Windows developers working on content summarization, content generation, task automation, and more.

Accelerated Computing: Blackwell Platform and AI Factories

Generative AI is driving a new industrial revolution, transforming data centers from cost centers into AI factories. These data centers use accelerated computing to process large datasets and to develop AI models and applications. Nvidia announced the Blackwell platform earlier this year, a significant leap in AI performance and efficiency. According to Nvidia, Blackwell's performance is 1,000 times that of the Pascal platform released eight years ago.

Nvidia's AI capabilities extend beyond chip-level advancements. Nvidia continuously innovates at various levels of the data center to improve AI factories. Nvidia's MGX platform provides a modular reference design for accelerated computing across a range of use cases, from remote visualization to edge supercomputing.

The platform supports multiple generations of hardware, ensuring compatibility with new accelerated architectures like Blackwell. According to Nvidia Accelerated Computing Director Dion Harris, the adoption rate of the MGX platform has significantly increased, with the number of partners growing from six to 25.

Nvidia announced the GB200 NVL2 platform, designed to bring generative AI capabilities to every data center. The platform provides 40 petaflops of AI performance in a single node, along with 144 Arm Neoverse CPU cores and 1.3 TB of fast memory. Compared with traditional CPU systems, it significantly improves the performance of LLM and data processing tasks: data processing is 18 times faster and vector database search queries are 9 times faster.

"Our platform is a true AI factory, encompassing compute, networking, and software infrastructure. It significantly improves the performance of LLM and data processing tasks compared to traditional CPU systems," said Harris.

Network: Spectrum-X Ethernet for AI

Nvidia's Spectrum-X is an Ethernet network designed specifically for AI factories. Traditional Ethernet is optimized for hyperscale cloud data centers, whose workloads involve minimal server-to-server communication and tolerate jitter well, which makes it a poor fit for AI. AI factories, in contrast, require robust support for distributed computing.

Spectrum-X is Nvidia's end-to-end Ethernet solution, combining network interface cards (NICs) and switches to optimize GPU-to-GPU connections. According to Amit Katz, Vice President of Nvidia's Networking Products, it significantly improves performance by providing higher bandwidth, greater full-duplex bandwidth, better load balancing, and telemetry that is 1,000 times faster for real-time optimization of AI applications.

"The network determines the data center," Katz said. "Spectrum-X is the key to Ethernet-based AI factories, and this is just the beginning. The amazing one-year roadmap for Spectrum-X is about to be launched."

Spectrum-X is already in production, and a growing number of partners are deploying it in AI factories and generative AI clouds worldwide. Partners are integrating the BlueField-3 SuperNIC into their systems and offering Spectrum-X as part of Nvidia's reference architecture. At Computex, Nvidia will demonstrate Spectrum-X's ability to support AI infrastructure and operations across industries.

Enterprise Computing: NIM Inference Performance

Nvidia NIM is a set of microservices designed to run the next generation of AI models, specifically for inference tasks. NIM is now open to all developers, including existing developers, enterprise developers, and AI beginners.

Developers can access NIM (provided in standard Docker containers) from Nvidia's portal or popular model repositories. Nvidia plans to offer developers free access to NIM through its Nvidia Developer Program for research, development, and testing. NIM integrates with different tools and frameworks, making it accessible regardless of the development environment.
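As a rough illustration of what calling a containerized inference microservice looks like from application code, the sketch below builds and sends a chat request using only the Python standard library. It assumes a NIM container is already running on the local machine and serving an OpenAI-style chat completions route on port 8000; the URL, the model name, and the helper names `build_request` and `ask` are assumptions made for this example, so check the documentation of the specific NIM you deploy.

```python
import json
import urllib.request

# Assumptions: local container, OpenAI-style route, example model name.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "meta/llama3-8b-instruct"


def build_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying one user message."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply (needs a running service)."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a service listening at the assumed address, `ask("Summarize the Computex keynote in one sentence.")` would return the model's reply as a string. Nothing is sent until `ask` is called.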

"AI no longer has to be limited to those who understand data science," said Manuvir Das, Vice President of Nvidia's Enterprise Computing. "Any AI developer, any new enterprise developer, can focus on building application code. NIM takes care of all the underlying pipelines."

NIM addresses two main challenges: optimizing model performance and simplifying deployment for developers. It includes Nvidia's runtime libraries to maximize model execution speed, tripling throughput compared to off-the-shelf models. This improvement allows for more efficient use of AI infrastructure, generating more output in a shorter amount of time. According to Das, NIM simplifies deployment by reducing the time required to set up and optimize models from weeks to minutes.

The launch of NIM marks a significant milestone, with over 150 partners integrating it into their tools. Nvidia offers free access to NIM through its developer program. For enterprise production deployment, the price of an Nvidia AI Enterprise license is $4,500 per GPU per year.
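To see how the throughput and licensing figures interact, the back-of-the-envelope sketch below estimates how many GPUs a fixed workload would need with and without the claimed 3x throughput, and what the license would cost. The workload numbers (120 requests per second, 10 requests per second per GPU) are invented for illustration; only the 3x factor and the $4,500 price come from the figures quoted above.

```python
import math

LICENSE_USD_PER_GPU_YEAR = 4_500  # Nvidia AI Enterprise price quoted in the article
NIM_THROUGHPUT_FACTOR = 3         # "tripling throughput", per Nvidia's claim


def gpus_needed(target_rps: float, baseline_rps_per_gpu: float, use_nim: bool) -> int:
    """GPUs required to serve target_rps, rounded up to a whole GPU."""
    factor = NIM_THROUGHPUT_FACTOR if use_nim else 1
    return math.ceil(target_rps / (baseline_rps_per_gpu * factor))


def annual_license_cost(gpu_count: int) -> int:
    """Yearly license cost in US dollars for the given number of GPUs."""
    return gpu_count * LICENSE_USD_PER_GPU_YEAR


# Hypothetical workload: 120 requests/s, 10 requests/s per GPU at baseline.
baseline = gpus_needed(target_rps=120, baseline_rps_per_gpu=10, use_nim=False)
with_nim = gpus_needed(target_rps=120, baseline_rps_per_gpu=10, use_nim=True)
print(baseline, with_nim, annual_license_cost(with_nim))  # prints: 12 4 18000
```

Under these assumed numbers, the same workload fits on 4 GPUs instead of 12, and licensing those 4 GPUs costs $18,000 per year. Hardware, power, and hosting costs are deliberately left out.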

Industrial Digitalization: Omniverse and Robotics

Driven by advances in artificial intelligence and digital twin technology, the manufacturing industry is undergoing industrial digitalization. Over the past decade, Nvidia has developed the Omniverse platform, which the company claims has reached a tipping point in industrial digitalization. Omniverse connects with key industrial technologies from companies such as Siemens and Rockwell, offering simulation, synthetic data generation for AI training, and high-fidelity visualization.

Nvidia takes a three-computer approach to artificial intelligence and robotics: an AI supercomputer in the data center for creating models, a runtime computer on the robot for real-time sensor processing, and an Omniverse computer for digital twin simulation. This setup allows for extensive virtual testing and optimization of AI models and robotic systems.

Deepu Talla, Vice President of Nvidia Robotics and Edge Computing, said, "Training and testing robots in the real world is an extremely challenging problem. It is costly, unsafe, and time-consuming. We can have hundreds of thousands or even millions of robots to simulate digital twins. Many of these AI capabilities can be tested in simulation before being brought into the real world."

At Computex, Nvidia demonstrated how leading electronics manufacturers such as Foxconn, Delta, Pegatron, and Wistron have adopted AI and Omniverse to digitalize their factories. These companies use digital twins to optimize factory layouts, test robots, and improve worker safety. Nvidia will also showcase how companies like Siemens, Intrinsic, and Vention are using its Isaac platform to build robots.